With an unprecedented volume of data and number of devices connected to the internet, cloud and AI services that automate and speed up innovation through insights are no longer enough. Data is now created at a never-before-seen complexity and scale, which has outgrown both infrastructure and network capacity.

Simply sending all data to a centralized data center, or even to the cloud, will cause latency and bandwidth issues. This is where edge computing steps in, by offering a much more efficient alternative - processing and analyzing data closer to where it’s being created.

In this guide, we will cover:

- How does edge computing work?

- A brief history of edge computing

- Edge computing vs cloud computing vs fog computing

- Why is edge computing important?

- Edge computing applications

How does edge computing work?

In traditional business computing, data is produced at endpoints, such as user computers. It is then moved across a WAN - like the internet - and through the company LAN, where it is stored and processed by a company application. The results are then reported back to the user endpoint.

The large number of devices connected to the internet nowadays, however, is also producing a large amount of data - and it is growing too quickly for the traditional computing approach. According to Gartner, by 2025 around 75% of data generated by businesses will be created outside of centralized data centers. Moving this volume of data can disrupt operations, which calls for a more streamlined approach.

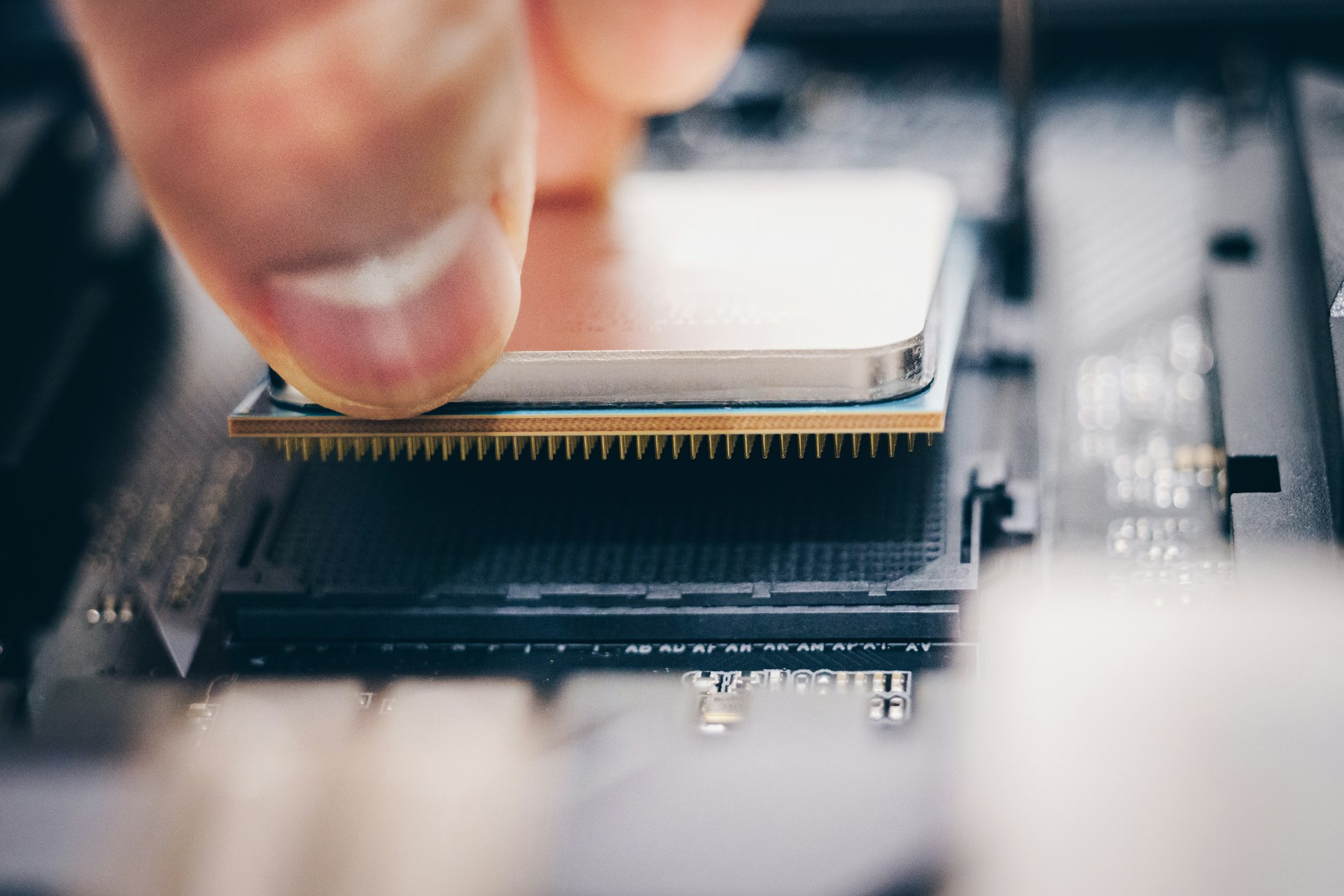

If the data can’t get closer to the data center, then the data center will get closer to the data. Edge computing moves servers and storage to where the data is. The implementation of edge computing needs to consider how it’ll be maintained, with factors such as:

- Connectivity. Access to control and reporting needs to be provided for, even when the connection used for the data itself is unavailable. One way to handle this is to use a secondary connection as a backup.

- Security. Security needs to be planned for with tools that emphasize intrusion detection and prevention, alongside vulnerability management. This also has to cover IoT (Internet of Things) devices and sensors, because each separate device is an element of the network that can be accessed.

- Physical maintenance. IoT devices have limited lifespans, requiring the replacement of components and maintenance in order to keep them running.

- Management. Some edge sites are in remote locations, which translates to the need for remote management so that businesses can see what’s happening at the edge and control deployment.
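The connectivity point in the list above can be illustrated with a small failover routine. This is only a sketch under assumptions: the link names, the `primary` and `backup` functions, and the `LinkDown` exception are hypothetical stand-ins for whatever transport a real deployment uses.

```python
from typing import Callable, Sequence


class LinkDown(Exception):
    """Raised by a (hypothetical) link when it cannot deliver a message."""


def send_with_failover(
    message: str, links: Sequence[tuple[str, Callable[[str], None]]]
) -> str:
    """Try each link in priority order and return the name of the one that worked."""
    for name, send in links:
        try:
            send(message)
            return name
        except LinkDown:
            continue  # fall through to the next (backup) link
    raise LinkDown("no link available; buffer the report locally and retry later")


def primary(message: str) -> None:
    raise LinkDown("primary WAN link is unavailable")  # simulate an outage


delivered: list[str] = []


def backup(message: str) -> None:
    delivered.append(message)  # simulate a working secondary link


used = send_with_failover("status: ok", [("primary", primary), ("backup", backup)])
print(used)
```

In this simulated outage the status report goes out over the backup link, so control and reporting stay reachable even though the primary connection is down.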

A brief history of edge computing

1990s: Akamai launches the content delivery network (CDN), with the idea of introducing nodes at locations that are geographically closer to end-users in order to deliver cached content like images and videos.

1997: Noble et al. demonstrate, in their work titled Agile application-aware adaptation for mobility, how different application types (video, web browser, speech recognition) that run on resource-constrained mobile devices can offload some tasks to powerful servers (surrogates). The aim was to alleviate the load on computing resources and, as proposed later on in further work, to improve the battery life of mobile devices.

2001: Satyanarayanan, in reference to pervasive computing, generalizes the approach set by Noble et al. in the paper Pervasive computing: vision and challenges. Decentralized and scalable applications use different peer-to-peer overlay networks. These self-organizing overlay networks allow for fault-tolerant and efficient routing, load balancing, and object location. They also make it possible to exploit the network proximity of the underlying physical connections on the internet, which avoids long-distance links between users. This both decreases the overall network load and improves the latency of applications.

2006: Cloud computing, a major influence on edge computing, starts getting particular attention. Amazon first promotes its ‘Elastic Compute Cloud’, which paves the way for new opportunities for computation, storage capacity, and visualization.

2009: Satyanarayanan et al. introduce the term ‘cloudlet’ in the paper titled The case for VM-based cloudlets in mobile computing. The main focus of the work is latency, and it proposes a two-tier architecture. The first tier, the cloud, has high latency, and the second tier, the cloudlets, has lower latency. Cloudlets are decentralized and widely dispersed internet infrastructure components, with storage resources and compute cycles that can be leveraged by mobile computers in proximity. Cloudlets also store only soft state, such as cached copies of data.

2012: The term ‘fog computing’ is introduced by Cisco. The term is used to describe dispersed cloud infrastructures, with the goal being to promote IoT scalability in order to handle the large number of IoT devices and data volume for real-time, low-latency applications.

Edge computing vs cloud computing vs fog computing

Edge computing, cloud computing, and fog computing are all interconnected terms - but they’re not the same thing. They all refer to distributed computing and the physical deployment of storage and compute resources. The difference is where those resources are located.

What is edge computing?

Edge computing is essentially the deployment of storage and compute resources where the data is being produced, placing both storage and compute at the same location as the source of data at the network edge. The edge network has:

- Provider core. The traditional “non-edge” tiers, which belong to public cloud providers.

- Service provider edge. Located between the regional or core data centers and the last mile access, these tiers are usually owned by internet service providers.

- End-user premises edge. These tiers are on the end-user side of the last mile access, and can include the enterprise edge or the consumer edge.

- Device edge. Non-clustered systems that connect to sensors directly through non-internet protocols, representing the network’s far edge.

What is cloud computing?

Cloud computing is the deployment of storage and compute resources in a large and scalable way, in one of many regions. Cloud computing also tends to include services for IoT operations, which in turn often makes the cloud a preferred method of centralized deployments.

This doesn’t, however, place analysis facilities where the data is being collected, making the cloud reliant on internet connectivity. Cloud computing can, then, be a complement to traditional data centers, but it can’t put centralized computing at the network edge.

What is fog computing?

If cloud computing storage is too far away but an edge deployment is too resource-limited, fog computing usually steps in. It takes a step back by placing storage and compute resources near the data, but not necessarily at the exact point where the data is produced.

Fog computing suits environments that generate enormous amounts of sensor or IoT data across physical areas that are too large to define as a single edge. Sometimes one edge deployment isn’t enough, which leads to fog computing operating several fog node deployments in the environment in order to collect, process, and analyze data.

Edge and fog computing are often used interchangeably, as they can be similar in architecture and definition.
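The idea of several fog nodes collecting and summarizing data across a wide area can be sketched in a few lines. The node names, sensor IDs, and readings below are hypothetical, and a real deployment would receive readings over a network rather than from an in-memory list.

```python
from collections import defaultdict
from statistics import mean

# (fog_node, sensor_id, reading): hypothetical readings from a wide-area deployment
readings = [
    ("fog-north", "s1", 21.0),
    ("fog-north", "s2", 23.0),
    ("fog-south", "s3", 30.0),
    ("fog-south", "s4", 34.0),
    ("fog-south", "s5", 32.0),
]

# Group raw readings by the fog node that collected them.
by_node = defaultdict(list)
for node, _sensor, value in readings:
    by_node[node].append(value)

# Each fog node forwards one summary upstream instead of every raw reading.
summaries = {
    node: {"count": len(values), "mean": mean(values)}
    for node, values in by_node.items()
}
print(summaries)
```

Each node processes its own area locally, and only the per-node summaries would need to travel on to a central data center or cloud.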

Why is edge computing important?

With the increasing need for fast, responsive, and often real-time networks, edge computing comes in as a response to three major network challenges.

Latency

Latency is the time it takes to send data between two points on a network. Even though communication can, in principle, occur at the speed of light, long physical distances, network congestion, and outages can all delay data in transit. This delay slows down analytics and decision-making, which in turn impairs the system’s capacity for real-time responses.
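A rough worked example shows why distance matters even at the speed of light. The sketch below estimates the best-case round-trip propagation delay over optical fiber, where light travels at roughly two-thirds of its vacuum speed. The two distances are assumptions chosen purely for illustration, and real latency is higher once routing, queuing, and processing are added.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in vacuum, km/s
FIBER_FACTOR = 2 / 3               # light in optical fiber travels at roughly 2/3 of c


def round_trip_ms(distance_km: float) -> float:
    """Best-case round-trip propagation delay over fiber, in milliseconds."""
    one_way_s = distance_km / (SPEED_OF_LIGHT_KM_S * FIBER_FACTOR)
    return 2 * one_way_s * 1000


cloud_rtt = round_trip_ms(2000)  # assumed distance to a distant cloud region
edge_rtt = round_trip_ms(10)     # assumed distance to a nearby edge site
print(f"cloud: {cloud_rtt:.2f} ms, edge: {edge_rtt:.2f} ms")
```

Under these assumptions the distant data center costs about 20 ms per round trip before any processing happens, while the nearby edge site costs about 0.1 ms.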

Bandwidth

Typically measured in bits per second, bandwidth is related to how much data a network is able to carry over time. Networks have limited bandwidth, especially when it comes to wireless communication. This limits the amount of data and number of devices that can communicate in the network, making it an expensive endeavor to scale up and increase bandwidth.
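To make the bandwidth limit concrete, the following sketch compares the aggregate demand of a group of devices with the capacity of a shared uplink. All figures (500 cameras, 2 Mbit/s per raw stream, 5 kbit/s per event summary, a 100 Mbit/s uplink) are assumptions for illustration only.

```python
def required_mbps(devices: int, kbps_per_device: float) -> float:
    """Aggregate uplink demand in megabits per second."""
    return devices * kbps_per_device / 1000


UPLINK_MBPS = 100.0                 # assumed uplink capacity
raw = required_mbps(500, 2000)      # 500 cameras streaming an assumed 2 Mbit/s each
summarized = required_mbps(500, 5)  # same cameras sending only small event summaries

print(raw, raw <= UPLINK_MBPS)
print(summarized, summarized <= UPLINK_MBPS)
```

With these numbers, streaming everything would need 1000 Mbit/s and overwhelm the link, while processing locally and sending only summaries needs 2.5 Mbit/s.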

Congestion

Both the enormous volume of data and the number of devices connected to the internet can cause congestion and data retransmission, the latter of which is time-consuming. Network outages can also worsen congestion and sever communication.

Edge computing applications

Network optimization

With edge computing, network performance can be optimized by measuring performance for users across the internet. The analytics can then be used to determine the most reliable, low-latency network path for each user’s traffic. In effect, edge computing ‘steers’ traffic across the network to give time-sensitive traffic the best performance.
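One simple way to picture this kind of traffic steering is to filter candidate paths by reliability and then pick the one with the lowest typical latency. The sketch below is a hypothetical illustration, not a description of any particular product: the `PathStats` fields, the 1% loss threshold, and the sample measurements are all assumptions.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class PathStats:
    name: str
    latencies_ms: list[float]  # recent latency samples for this path
    loss_rate: float           # fraction of probes lost, 0.0 to 1.0


def best_path(paths: list[PathStats], max_loss: float = 0.01) -> PathStats:
    """Pick the lowest-median-latency path among those that are reliable enough."""
    reliable = [p for p in paths if p.loss_rate <= max_loss]
    candidates = reliable or paths  # fall back to all paths if none is reliable
    return min(candidates, key=lambda p: median(p.latencies_ms))


paths = [
    PathStats("path-a", [18.0, 22.0, 19.0], 0.001),
    PathStats("path-b", [9.0, 11.0, 10.0], 0.05),  # fastest, but too lossy
    PathStats("path-c", [14.0, 15.0, 40.0], 0.002),
]
chosen = best_path(paths)
print(chosen.name)
```

Using the median rather than the mean keeps a single slow sample, like the 40 ms outlier on `path-c`, from disqualifying an otherwise fast path.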

Workplace safety

By combining and analyzing data from on-site cameras, edge computing can offer higher workplace safety. Workplace conditions can be overseen and businesses can ensure that employees follow safety protocols, particularly when the workplace is remote or dangerous.

Manufacturing

In manufacturing, edge computing can monitor operations and enable machine learning at the edge and real-time analytics, finding production errors and improving the quality of product manufacturing. It can also help in the addition of environmental sensors that offer insights into how components are both stored and assembled.
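As a small illustration of real-time analytics at the edge, the sketch below flags sensor readings that deviate sharply from a rolling baseline, so that only the unusual readings need to be reported upstream. The window size, the threshold of three standard deviations, and the temperature values are assumptions, and a production system would likely use a more robust model.

```python
from collections import deque
from statistics import mean, stdev


def detect_anomalies(readings, window=5, threshold=3.0):
    """Yield (index, value) for readings far from the recent rolling mean."""
    recent = deque(maxlen=window)
    for i, value in enumerate(readings):
        if len(recent) == window:
            mu, sigma = mean(recent), stdev(recent)
            if sigma > 0 and abs(value - mu) > threshold * sigma:
                yield i, value
                continue  # keep the outlier out of the baseline window
        recent.append(value)


# Hypothetical temperature readings from a machine on the production line.
temps = [70.1, 70.3, 69.9, 70.2, 70.0, 70.1, 85.4, 70.2, 69.8]
anomalies = list(detect_anomalies(temps))
print(anomalies)
```

Here only the spike at index 6 is flagged, and the normal readings never have to leave the factory floor.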

Healthcare

The amount of patient data collected, and the number of devices collecting it, have expanded substantially. Whether it comes from sensors or other medical equipment, this large volume of data needs edge computing to apply machine learning and automation in order to identify important information.

Farming

In farming, sensors can help businesses to track nutrient density, track water usage, and determine optimal harvest times. This data is then collected and analyzed to pinpoint environmental factor effects, allowing for the continuous improvement of crop growth with algorithms.

Retail

With stock tracking, surveillance cameras, sales data, and other real-time information, retail businesses have an enormous volume of data that needs to be analyzed in order to identify business opportunities. Edge computing can be a great solution for local processing at individual stores.

Transportation

With self-driving vehicles processing enormous volumes of data each day, like road conditions and vehicle speed, onboard computing becomes essential. Each of these vehicles becomes an ‘edge’, helping businesses manage their fleet based on real-time insights.

For more resources like this, AIGENTS have created 'Roadmaps' - clickable charts showing you the subjects you should study and the technologies that you would want to adopt to become a Data Scientist or Machine Learning Engineer.
