Autonomy is a big part of achieving interplanetary goals. Computer vision and deep learning can work together toward it, with computer vision algorithms further improving the performance of autonomous systems.

In space exploration, computer vision and AI reduce the time astronauts have to spend on repetitive tasks and improve their capabilities in execution, perception, information storage and retrieval, task planning, and more.

In this article, we’ll have a look at computer vision in space exploration, specifically:

- Spacecraft docking

- Autonomous precision landing

- Asteroid detection

- Tracking and surveillance of space debris

Spacecraft docking

NASA’s Goddard Space Flight Center, located in Maryland, USA, developed Raven, a hybrid computing system to aid in relative navigation and autonomous capability. Launched on February 19, 2017, aboard SpaceX’s Dragon spacecraft for a two-year mission, Raven tested and matured visible-light, infrared, and LIDAR sensors along with computer vision algorithms.

Its objective? To help develop technologies that allow spacecraft to dock autonomously and in real time. Raven’s sensors worked alongside the SpaceCube processor, an extremely fast, reconfigurable computing platform that was used on Hubble Servicing Mission 4 in 2009.

Raven was the eyes and SpaceCube the brain, and together they created the autopilot ability. During its stay at the International Space Station (ISS), Raven sensed both incoming and outgoing spacecraft, feeding its data to SpaceCube 2.0, part of the family of SpaceCube products. SpaceCube ran a set of pose algorithms to estimate the pose, that is, the relative position and orientation, of any spacecraft Raven tracked, including its distance from Raven.

SpaceCube 2.0 then autonomously sent commands to swivel the Raven module on its pointing system, or gimbal, so the sensors stayed trained on the vehicle being tracked. On the ground, NASA operators monitored Raven’s performance and made adjustments to its tracking capabilities.
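
To make the pointing step concrete, here is a minimal sketch of how a relative position estimate could be turned into gimbal angles. It is not Raven’s or SpaceCube’s actual software: the function name `pointing_angles` and the axis convention (x forward, y left, z up, in metres) are assumptions made for illustration.

```python
import math


def pointing_angles(rel_pos):
    """Return (azimuth_deg, elevation_deg, range_m) needed to aim at a target.

    rel_pos is the target position (x, y, z) in metres in the sensor frame.
    Assumed convention: x forward, y left, z up.
    """
    x, y, z = rel_pos
    range_m = math.sqrt(x * x + y * y + z * z)
    if range_m == 0.0:
        raise ValueError("target coincides with the sensor")
    # Azimuth: rotation about the vertical axis, measured from the forward axis.
    azimuth_deg = math.degrees(math.atan2(y, x))
    # Elevation: angle above the horizontal (x-y) plane.
    elevation_deg = math.degrees(math.asin(z / range_m))
    return azimuth_deg, elevation_deg, range_m


# Hypothetical target 100 m ahead and 100 m to the left, level with the sensor.
azimuth, elevation, distance = pointing_angles((100.0, 100.0, 0.0))
```

Repeating this calculation for every new pose estimate would give the gimbal a stream of angles that keeps the target centred in the sensors’ field of view.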

Autonomous precision landing

A computer vision algorithm can help estimate a spacecraft’s relative position during descent. A landmark recognition-based algorithm can then calculate its motion relative to the surface. Computer vision algorithms also support many tasks during and after landing, such as:

- Obstacle detection and avoidance

- Routine testing and inspection

- Payload deployment and retrieval

- Monitoring the loading and unloading of equipment

- Excavation and mining

- Terrain map building and surveillance of planet surfaces

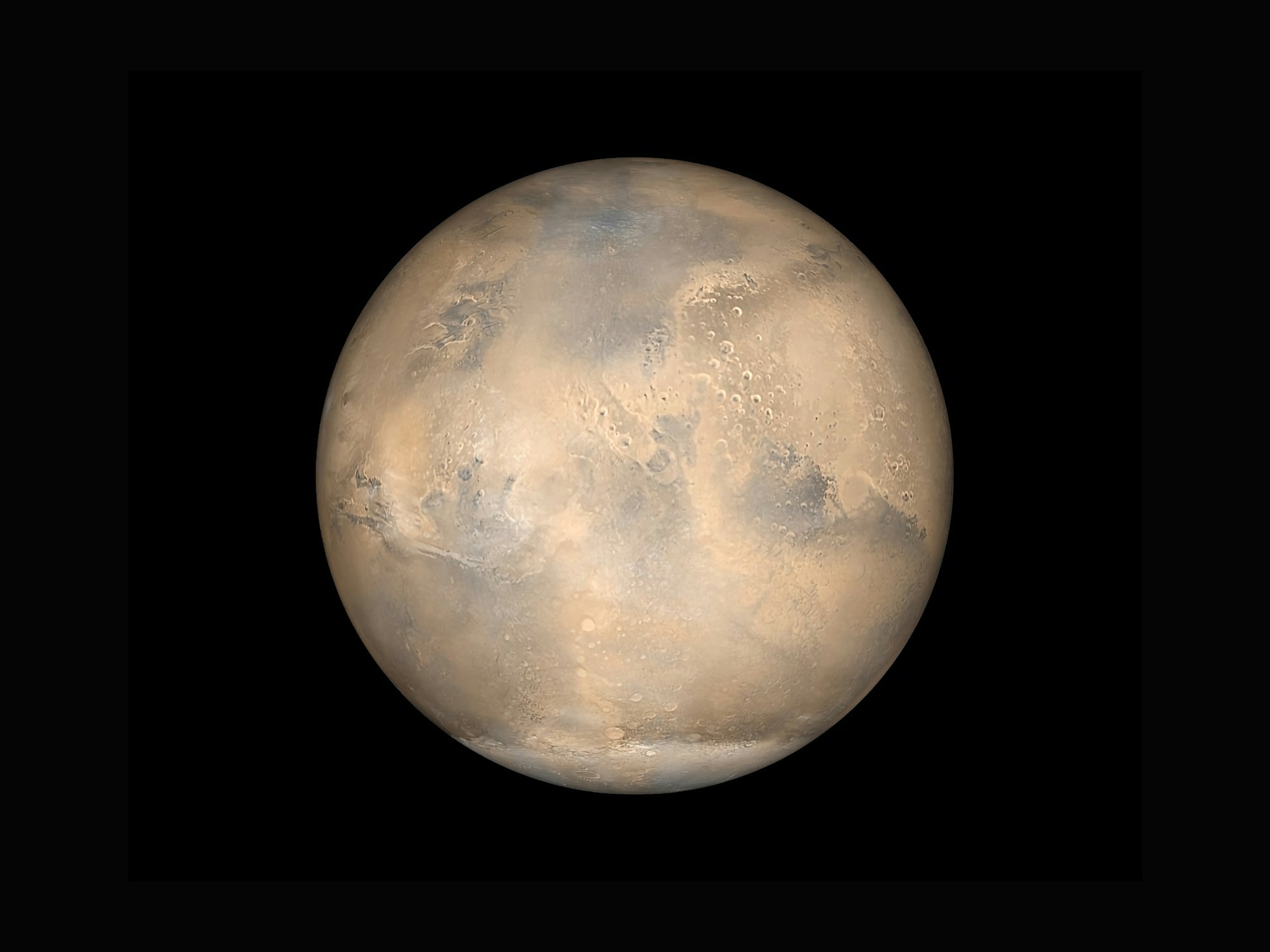

The distance between the Earth and Mars, for example, means that direct and instant communication with rovers is impossible. Depending on the position of Mars in relation to Earth, it can take between three and 22 minutes to transmit signals to a rover.
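
The delay follows directly from the distance and the speed of light. The sketch below works it out for one-way signals; the two distances are approximate figures for the closest and farthest Earth-Mars separation and are assumptions, not values from a specific mission.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in vacuum, km/s


def one_way_delay_minutes(distance_km):
    """Time for a radio signal to cover distance_km, in minutes."""
    return distance_km / SPEED_OF_LIGHT_KM_S / 60.0


# Approximate Earth-Mars separations (assumed round figures).
closest = one_way_delay_minutes(54.6e6)    # Mars near its closest approach
farthest = one_way_delay_minutes(401.0e6)  # Mars on the far side of the Sun

print(f"closest: {closest:.1f} min, farthest: {farthest:.1f} min")
```

With these inputs the one-way delay comes out at roughly 3 and 22 minutes, which is why a landing has to be flown autonomously rather than steered from Earth.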

An artificial intelligence system called Terrain-Relative Navigation (TRN) helped NASA’s Perseverance rover descend and land safely on Mars. It relies on the Lander Vision System (LVS), which provides position measurements relative to known landmarks on the surface to augment inertial navigation.

While the rover was descending to its landing site, a camera on Perseverance took images of the surface, which were analyzed in real time. The images were then matched against a map of the landing site, built from orbital imagery and stored on board the rover.

By comparing the images taken by the camera with the onboard map, TRN determined the rover’s position and automatically corrected its trajectory.
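
The idea of matching a descent image against a stored map can be sketched with normalized cross-correlation, a standard template-matching technique. This is not the algorithm that flew on Perseverance; the tiny map, the patch, and the function names `ncc` and `locate_patch` are all hypothetical and chosen for illustration.

```python
import math


def ncc(a, b):
    """Normalized cross-correlation of two equal-length lists of pixel values."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    da = [v - mean_a for v in a]
    db = [v - mean_b for v in b]
    denom = math.sqrt(sum(v * v for v in da) * sum(v * v for v in db))
    if denom == 0.0:
        return 0.0  # flat window: no texture to match against
    return sum(p * q for p, q in zip(da, db)) / denom


def locate_patch(terrain_map, patch):
    """Return ((row, col), score) of the best match of patch inside terrain_map."""
    ph, pw = len(patch), len(patch[0])
    mh, mw = len(terrain_map), len(terrain_map[0])
    flat_patch = [v for row in patch for v in row]
    best_score, best_pos = -2.0, None
    for r in range(mh - ph + 1):
        for c in range(mw - pw + 1):
            window = [terrain_map[r + i][c + j]
                      for i in range(ph) for j in range(pw)]
            score = ncc(window, flat_patch)
            if score > best_score:
                best_score, best_pos = score, (r, c)
    return best_pos, best_score


# Hypothetical 5x5 onboard map and a 2x2 patch "seen" by the descent camera.
terrain_map = [
    [1, 2, 1, 0, 3],
    [0, 9, 4, 1, 2],
    [2, 5, 8, 0, 1],
    [1, 0, 2, 7, 3],
    [3, 1, 0, 2, 6],
]
patch = [[9, 4], [5, 8]]

predicted = (0, 0)  # where inertial navigation alone places the patch (assumed)
found, score = locate_patch(terrain_map, patch)
correction = (found[0] - predicted[0], found[1] - predicted[1])
```

The difference between where the patch was expected and where it was actually found is the position error that a navigation filter could then use to correct the trajectory.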

Asteroid detection

Image processing, a subfield of computer vision, uses image enhancement, image identification, Gaussian stretching, and linear stretching to identify and classify new asteroids. In image processing, two-dimensional matched filters are often used to improve the signal-to-noise ratio of X-ray observations.
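
Linear stretching is the simplest of these enhancements: it rescales pixel values so that the faintest pixel maps to the bottom of the output range and the brightest to the top. A minimal sketch follows, with the function name `linear_stretch` and the 0 to 255 output range as assumptions.

```python
def linear_stretch(pixels, out_min=0.0, out_max=255.0):
    """Linearly rescale pixel values to span [out_min, out_max]."""
    lo, hi = min(pixels), max(pixels)
    if hi == lo:
        # A flat image has no contrast to stretch.
        return [out_min for _ in pixels]
    scale = (out_max - out_min) / (hi - lo)
    return [out_min + (p - lo) * scale for p in pixels]


# A low-contrast row of pixels, as might come from a dim star field.
stretched = linear_stretch([100, 110, 120])
```

Gaussian stretching follows the same pattern but maps values through a Gaussian-shaped transfer curve instead of a straight line.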

Matched filter processing offers a significant performance gain when it comes to stellar image processing for asteroid detection, recovery, and tracking. Matched filter software can detect 40% more asteroids in high-quality Spacewatch imagery than current approaches, which are based on Moving Target Indicator (MTI) algorithms.

Because the gain comes from the processing rather than the hardware, legacy sensors and optics can achieve better detection sensitivity, allowing for the surveillance of smaller near-Earth asteroids (NEAs).
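
As a rough illustration of the principle, the sketch below correlates an image with a zero-mean copy of the expected source shape and looks for the strongest response. It is a toy example on synthetic data, not the Spacewatch pipeline; the 3x3 point-spread function, the background pattern, and the function names are assumptions.

```python
def matched_filter(image, template):
    """Correlate image with a zero-mean template and return the response map."""
    th, tw = len(template), len(template[0])
    ih, iw = len(image), len(image[0])
    mean_t = sum(sum(row) for row in template) / (th * tw)
    # Subtracting the mean makes the filter insensitive to a constant background.
    kernel = [[v - mean_t for v in row] for row in template]
    response = []
    for r in range(ih - th + 1):
        row_out = []
        for c in range(iw - tw + 1):
            acc = 0.0
            for i in range(th):
                for j in range(tw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row_out.append(acc)
        response.append(row_out)
    return response


def peak(response):
    """Return (row, col) of the largest value in the response map."""
    best = max((v, r, c)
               for r, row in enumerate(response)
               for c, v in enumerate(row))
    return best[1], best[2]


# Assumed point-spread function of a faint point source.
psf = [[1, 2, 1], [2, 4, 2], [1, 2, 1]]

# Synthetic 8x8 frame: a bright, slightly uneven background (stand-in for sky noise).
image = [[10 + (r % 3) for c in range(8)] for r in range(8)]

# Add a faint source whose top-left corner sits at row 3, column 4.
for i in range(3):
    for j in range(3):
        image[3 + i][4 + j] += 3 * psf[i][j]

row, col = peak(matched_filter(image, psf))
```

The peak of the response map marks the window that best matches the expected source shape, even though the source is only a small bump on top of the background.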

Tracking and surveillance of space debris

The Department of Defense’s global Space Surveillance Network (SSN) tracks over 27,000 pieces of space debris. Space debris includes both artificial (human-made) orbital debris and naturally occurring meteoroid debris.

More than 23,000 of these pieces are larger than a softball, and they orbit the Earth at speeds of up to 17,500 mph, so it’s vital to track them to prevent damage to spacecraft.

Computer vision helps monitor the debris and reduce the risk it poses by:

- Using real-time video analysis to monitor the operational activities of spacecraft.

- Detecting and tracking debris using computer vision models.

- Using pre-trained machine learning models to predict future dangers during missions.
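
Once debris has been detected and tracked, a basic question is how close it will come to a spacecraft. The sketch below estimates the time and distance of closest approach assuming straight-line relative motion, which is only a reasonable approximation over short time spans; real conjunction assessment uses full orbit propagation. The function name and the example numbers are hypothetical.

```python
import math


def closest_approach(rel_pos_km, rel_vel_km_s):
    """Return (time_s, miss_distance_km) of closest approach.

    Assumes the debris moves in a straight line at constant velocity
    relative to the spacecraft.
    """
    speed_sq = sum(v * v for v in rel_vel_km_s)
    if speed_sq == 0.0:
        # No relative motion: the distance never changes.
        return 0.0, math.sqrt(sum(p * p for p in rel_pos_km))
    # Time at which the relative position is perpendicular to the velocity.
    t = -sum(p * v for p, v in zip(rel_pos_km, rel_vel_km_s)) / speed_sq
    t = max(t, 0.0)  # closest approach already passed: report current distance
    miss = math.sqrt(sum((p + v * t) ** 2
                         for p, v in zip(rel_pos_km, rel_vel_km_s)))
    return t, miss


# Hypothetical debris 100 km ahead, offset 2 km sideways, closing at 10 km/s.
time_s, miss_km = closest_approach((100.0, 2.0, 0.0), (-10.0, 0.0, 0.0))
```

In this example the debris passes within 2 km after 10 seconds, the kind of result that would feed a decision on whether an avoidance maneuver is needed.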
