The idea of creativity is often solely associated with humans.

Our ability to draw, write, and innovate is rivalled by no other species. It’s what has allowed us to become as advanced as we are today.

But soon, we might not be the only ones with the skill of creativity. Computers are catching up, and they’re catching up fast.

Artificial intelligence (AI) has already given computers the ability to play Atari games and write like Shakespeare. They’ve even been able to create their own music and art.

Creating art and composing music takes years for many to master. It requires consistent effort and dedication for a human to become skilled at performing such tasks.

But computers can learn such skills in hours.

It’s remarkable that a computer can learn extremely complex skills in such a short time, but how do they go about doing this? To understand the process, let’s observe how a computer can be taught to create its own handwritten digits.

Behold, the Variational Autoencoder!

Imagine conveying the plot of a 500-page fiction novel in ten words. Having to convey so much information in so few words sounds impossible.

But computers can perform similar feats relatively easily using variational autoencoders!

A variational autoencoder, or a VAE for short, is an AI algorithm with two main purposes — encoding and decoding information. Although it isn’t their primary purpose, they can also generate new information using this encoding and decoding ability (more on this later).

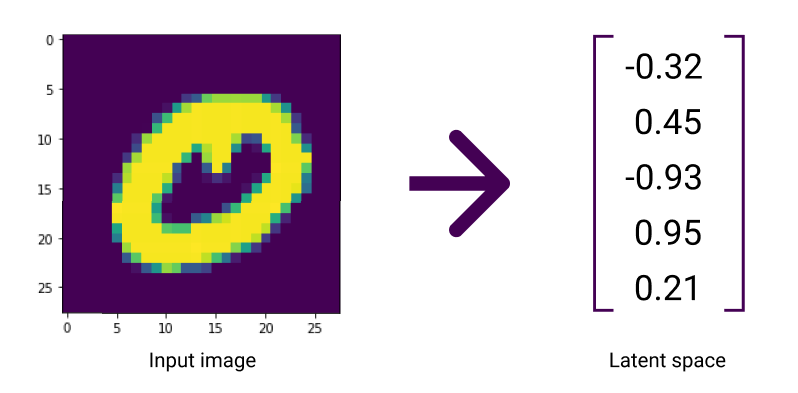

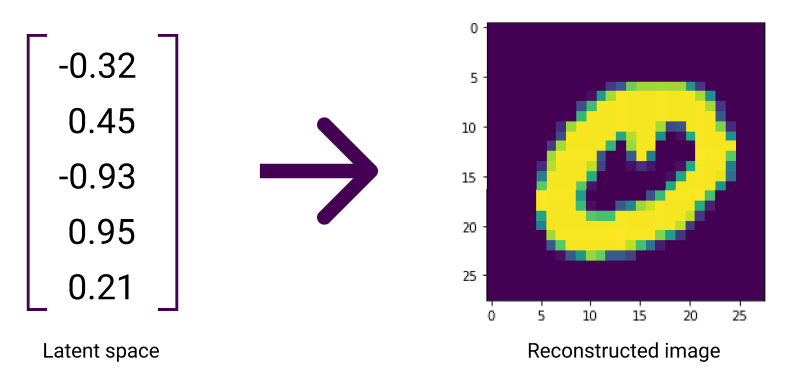

When encoding information, the VAE maps large amounts of information to smaller representations. This compressed representation of information is called the latent space of the VAE, as the original information is hidden in this compressed representation.

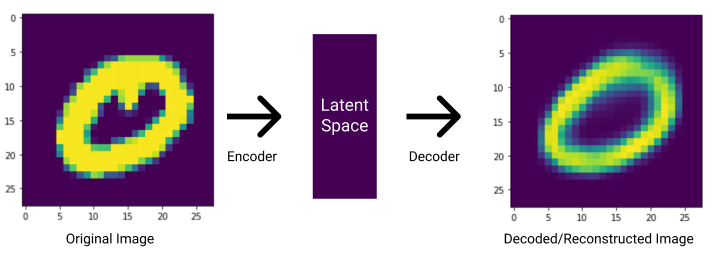

Considering the example of encoding and decoding images of handwritten numbers, the VAE would take in an image of a handwritten number and compress it into a latent space.

What’s cool about the VAE, though, is its ability to work backwards. It can reconstruct the original input image by observing the latent space. In other words, the VAE decodes the latent space to reconstruct the input.

Being able to condense and reconstruct images is super cool, but not easy to do. The VAE has to teach itself how to properly map from an input image to a latent space. Because they’re really good at learning such mappings, we typically train an AI algorithm called a neural network to work as a VAE.

A Brief Introduction to Neural Networks

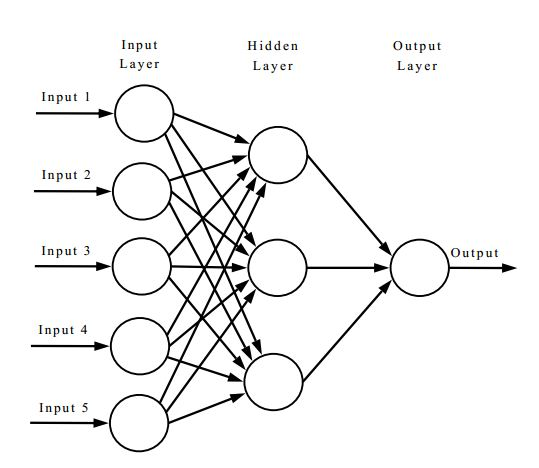

A neural network is really good at identifying patterns from data. It’s typically visualized as a series of interconnected circles called nodes.

Each column of nodes is collectively referred to as a layer. Information usually enters a neural network through the leftmost layer, which is called the input layer.

The information then gets passed to the middle layer, or hidden layer. More hidden layers in a neural network allow for the algorithm to learn more complex patterns in the input data.

After using the hidden layers to identify these complex patterns, a final output layer returns the neural network’s findings.

The way in which the input, output, and hidden layers are arranged is referred to as the neural network’s architecture. Different architectures can allow neural networks to perform different tasks. Because our VAE is created to encode and decode information, it requires a very specific neural network architecture to function.

Constructing the VAE

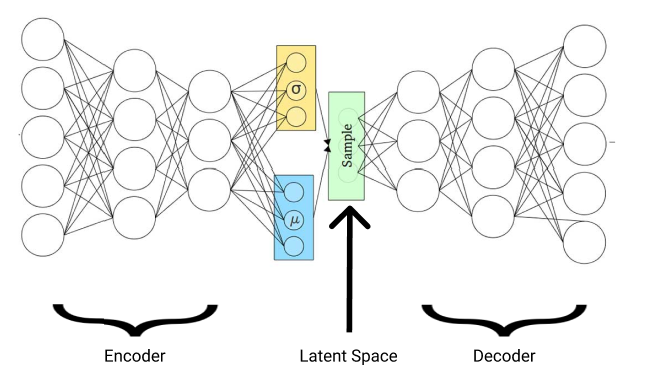

The VAE’s architecture consists of two parts — the encoder and decoder.

The encoder is utilized for mapping the input to a latent space, while the decoder maps the latent space back to the original input.

Because the decoder is used to reconstruct input information, it’s built as the exact opposite of the encoder. Putting the encoder and decoder together makes the VAE’s signature bowtie-like shape.

However, in the VAE, the encoder doesn’t directly map to the latent space. Rather, it first uses the input data to output two quantities called mu (the u symbol) and sigma (the o symbol).

Mu and Sigma

Let’s say we have two images of the number 7, both of which are fed to the VAE’s encoder. The latent spaces of both of these images should theoretically be similar, as both images contain the number 7.

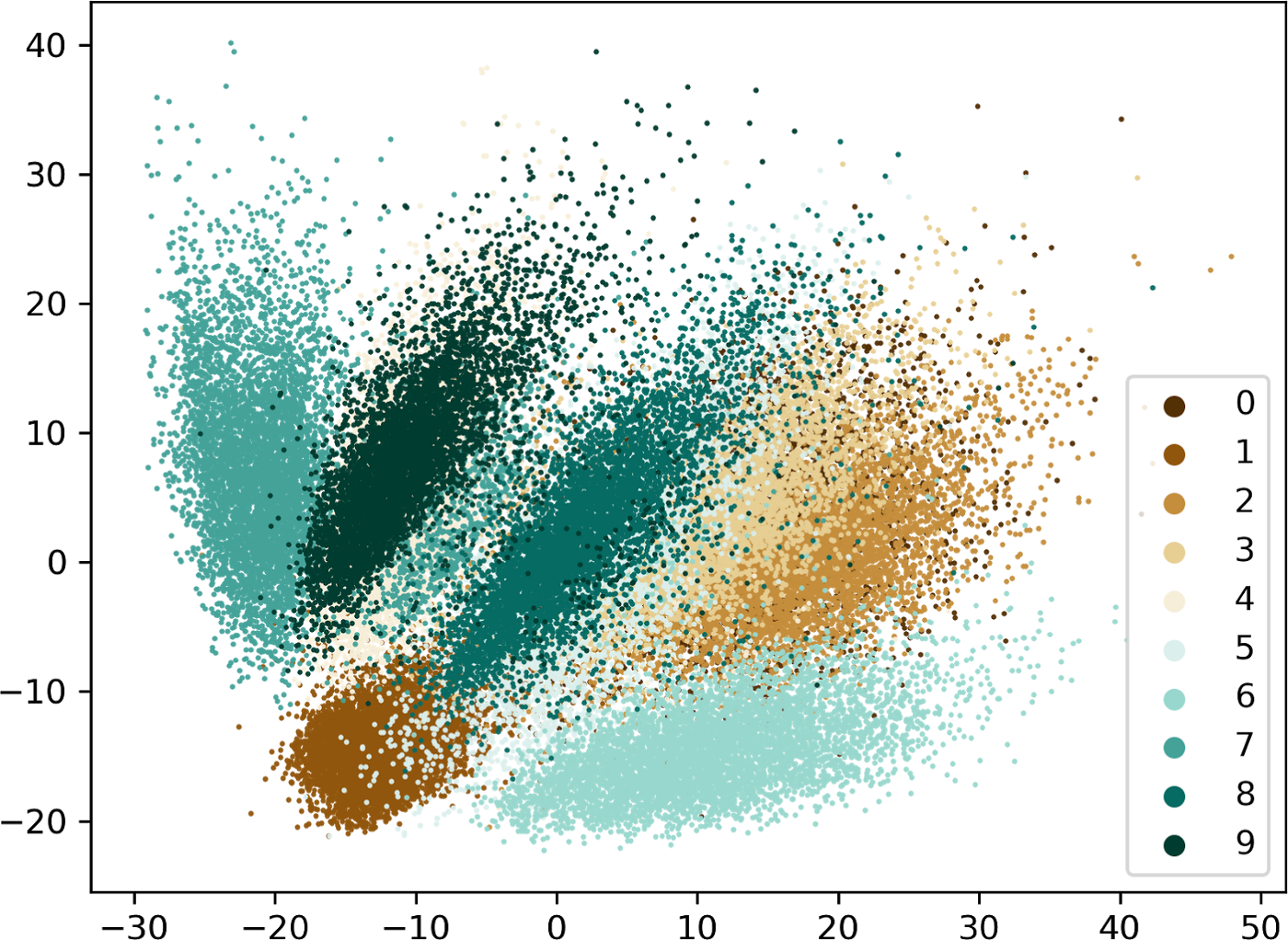

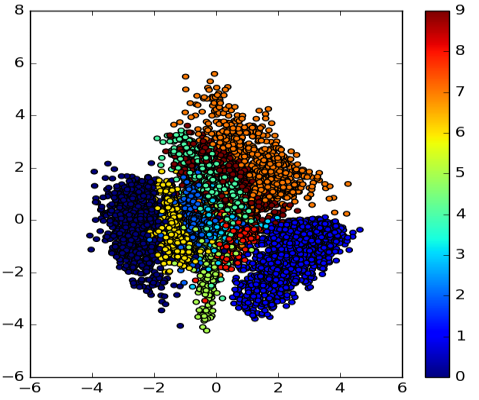

Surprisingly, though, that’s not the case. Visualizing encodings of handwritten number images showcases this phenomenon.

Notice how scattered the encodings of the same numbers are. The points are quite distant from each other.

This is bad news for our VAE. The VAE’s encoder should give similar encodings to similar images. By doing this, the VAE’s decoder can more accurately reconstruct the original image from the latent space.

If one specific encoding is generated per input image, however, the VAE’s decoder will get overwhelmed. It’s like trying to memorize the exact definitions of a million different words; the decoder cannot possibly learn how to deal with millions of specific encodings — the more general, the better.

To ensure that the decoder doesn’t have this problem, the VAE utilizes mu and sigma.

Think of mu and sigma as the VAE’s way of creating more general encodings. Utilizing mu and sigma allows for two distinct images of the number 6 to have similar encodings.

The generality of the encodings with mu and sigma can be seen when visualizing various latent spaces.

Now, images of the same numbers have encodings that are clustered together. The decoder won’t have to undergo the monumental task of learning how to decode millions of specific encodings.

But, unfortunately, the VAE isn’t created with the ability to create a meaningful mu and sigma that can successfully create more general encodings. It can’t even properly decode a latent space. Our VAE needs to train itself to efficiently perform these tasks.

Training the VAE

Every AI needs a way to critique itself. This includes our VAE; it cannot improve without knowing how to improve. To understand its mistakes and figure out how to improve itself, an AI uses a mathematical function called the loss function.

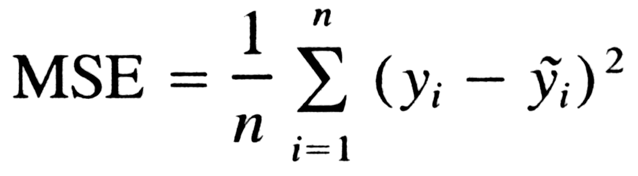

Thus, our VAE needs a loss function in order to learn how to properly function. It employs a very specific kind of loss function called the reconstruction loss.

The reconstruction loss measures the difference between an original image and a reconstructed image. A higher reconstruction loss corresponds to a higher lack of quality in the reconstructed image.

By aiming to minimize the reconstruction loss, the VAE can understand why its reconstructed images are missing the mark. With this knowledge, the VAE can tweak itself and flawlessly reconstruct input images!

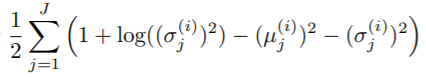

However, the reconstruction loss does not help with learning a better mu and sigma. Improving these quantities requires the use of an additional equation called the Kullback-Leibler Divergence, or KL-Divergence, which is used to learn a mu and sigma that can effectively create more general latent spaces.

Armed with the KL-Divergence and the reconstruction loss, the VAE is ready to learn! Here’s what the final training process would look like if we wanted to train a VAE to encode and decode images:

- The VAE first takes in an input image, which it encodes into a mu and sigma.

- The mu and sigma are combined to create a latent space.

- The VAE uses its decoder to reconstruct the input image from the latent space.

- The reconstruction loss and KL-Divergence are calculated using the reconstructed image, input image, mu, and sigma.

- Using the reconstruction loss and KL-Divergence values, the VAE slightly tweaks its encoder, decoder, mu, and sigma.

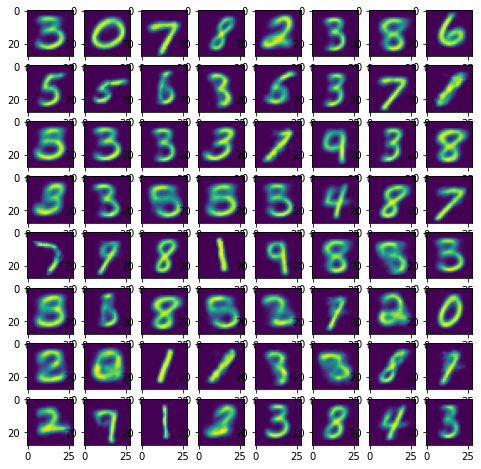

This process occurs repeatedly; the VAE encodes and decodes thousands of images, continually improving itself until, eventually, the VAE becomes really good at encoding and decoding images.

Generating New Handwritten Numbers With the VAE

While it may seem that the VAE’s sole purpose is for encoding and decoding data, it can also be used to create new data!

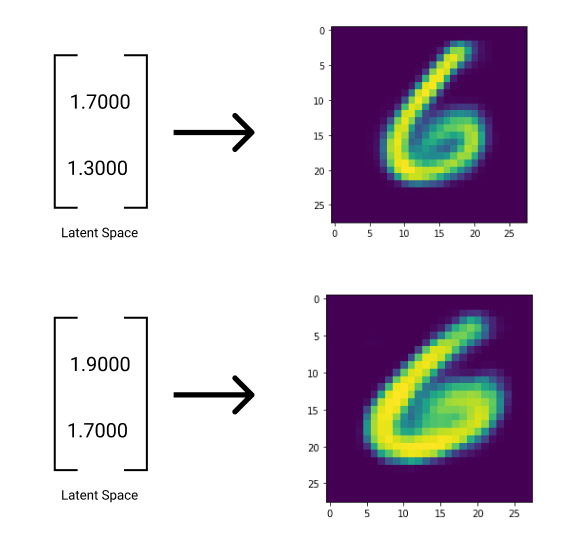

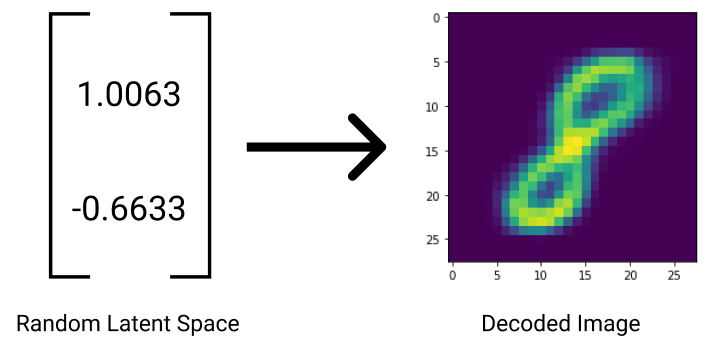

Because the VAE is good at decoding general latent spaces, it can actually decode random latent spaces as well. Picking random numbers for a latent space and feeding them to the VAE’s decoder gives some pretty interesting results!

The generated image will sometimes be fuzzy or even incomprehensible due to the use of a random latent space. You never know what kind of image the VAE will create!

With the VAE, computers can begin to create their own handwritten numbers. And this is only the start. VAEs are beginning to express their creativity further, performing feats like creating their own clothes. Computers are becoming increasingly creative, and VAEs are playing a big role in furthering that creativity.

Follow us on LinkedIn

Follow us on LinkedIn