We caught up with Sam to discuss what Flex Logix does, what the InferX platform is, how both the company and the platform differ from the competition, how easy it is to port models to the InferX platform, and more.

Medical imaging

Safety and factory inspection systems

Robotics

Transportation systems

Smart cities

Security systems

Q: Tell us a little about Flex Logix and the InferX platform.

A: Flex Logix is a company that was founded in 2014, so it's getting close to eight years old now. The company was built on a new approach to field-programmable gate array (FPGA) technology, which is designed to allow hardware logic to be reconfigured.

Typically, most silicon logic can’t be changed once it's manufactured. It will only do what it was designed to do, and you can't change it. However, field-programmable technology allows you to reconfigure the way that logic works after it's deployed in a system in the field.

So, that technology is increasingly used in a variety of devices. We actually license that as IP, or intellectual property, and a company that's building a chip will say “I want to include some FPGA logic within the larger chip I'm building”, and license that IP from Flex Logix, and we’ll support them in using our IP in their chips.

We had some customers who were looking at this technology and really believed that it could be well applied to artificial intelligence (AI) workloads. We had discussions with several companies on that front, and as we looked at it, we recognized that it does have value in AI and machine learning-type workloads.

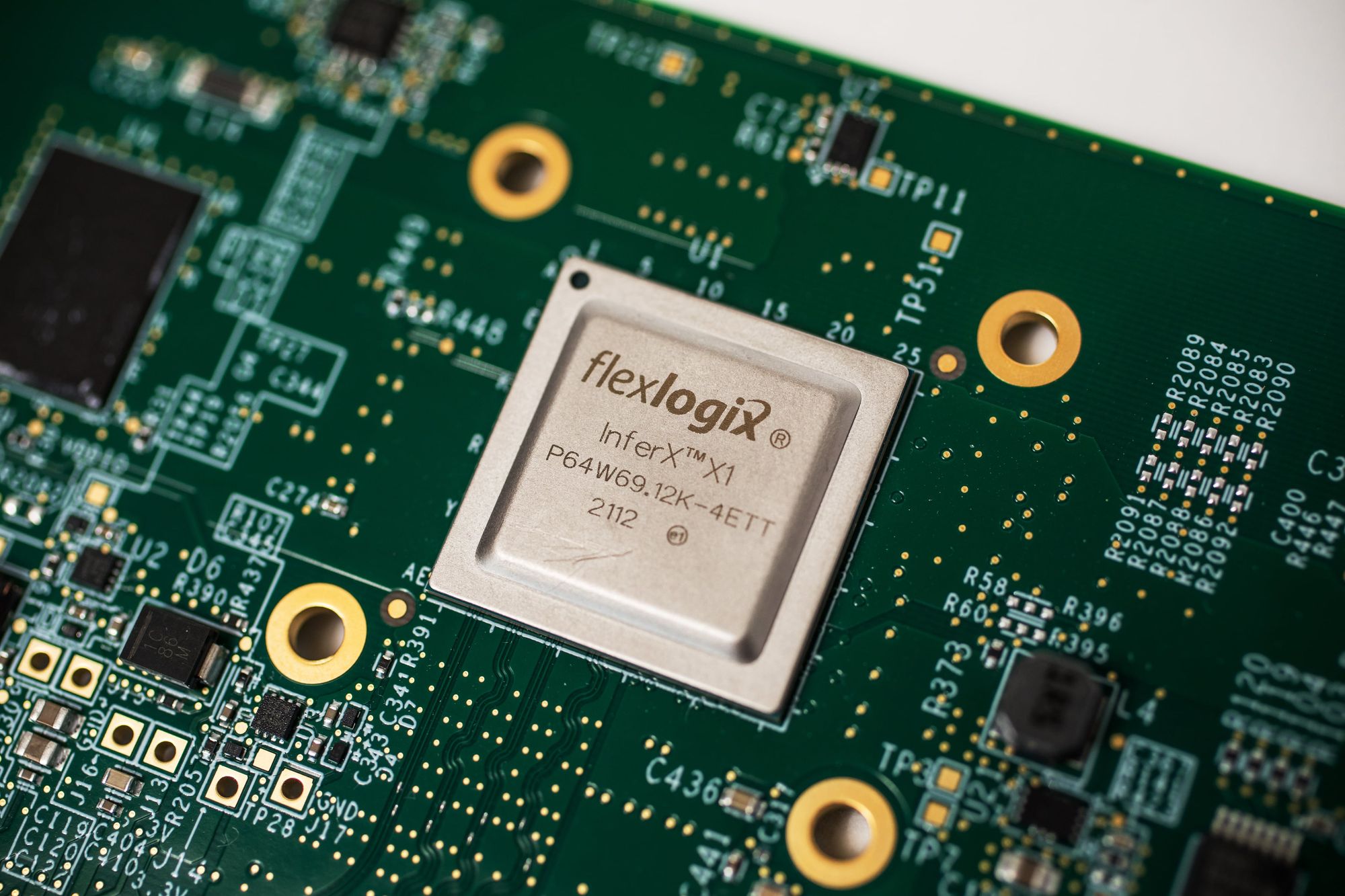

Our CTO, Cheng Wang, spent about two years architecting a new approach to AI. It leverages this FPGA technology, but combines it with tensor processing arrays. The combination of very efficient tensor processor math units with the reconfigurable logic is what we call our InferX platform. It's designed to support inferencing workloads. In the machine learning world, there's the model development and training phase, which is essentially teaching a model what it needs to do.

And, once it's taught, then you can actually deploy it, and you use the training that it received in deployment. The training aspect of most machine learning techniques now takes place in very large systems, generally running in cloud environments. For example, you could be running on some kind of supercomputer or data center hardware for a week or more to train a model with all of the data that you're using for training.

However, once you've completed that work, you're able to export a model that’s able to run in a much lower power and much smaller environment for what we call inferencing, which is the use of what was learned. It’s operationalizing or using what was learned during training.

We were talking earlier about these transcription processes*, where a system will transcribe what it hears into text. The process of teaching a system how to understand English is a very computationally intensive problem. But when you actually deploy that trained transcription model on a system and do transcription, it’s a much lighter problem. It's still very math-intensive, but much lighter than the training. So there's a separation of the training requirements from the inferencing requirements.

Our InferX technology is very much focused on, and very efficiently designed for, inferencing at the edge: very low-power, very small form factor systems that aren’t designed to be part of a large-scale cloud environment.

*Sam is referring to a pre-interview conversation we had about the transcription software process involved with interviews.

Q: What sets InferX apart from the competition?

A: I think that most of our competition is leveraging other forms of technology, like graphics processing units (GPUs). Those graphics processing units have a lot of math capability so they can process 3D graphics, meaning they’re very math capable for that fun problem. However, from a semiconductor perspective and a toolchain perspective, they really are designed for gaming, essentially for rendering polygons on a screen. They're very well optimized for that.

They've had 15 or 20 generations of evolution to really solve those problems very well. They're being applied to these machine learning problems because they exist, and people have developed software frameworks around them. But frankly, they're not very efficient for the machine learning applications because they’re generally very expensive, and they're very high power.

Gamers build these big rig systems, where they have extra power, extra fans, and other things because the power dissipation is so high. But whether you’re gaming or doing ML inference, you still need to build out a large system to support the power dissipation of the GPUs.

In contrast, we're really focused on very efficient, very low-power execution of the same kinds of workloads: processing at the same level as a GPU on an inference workload, but with a design that is very much focused on efficient inferencing.

No one would ever use our device for graphics processing. However, for the inference workloads, we’re a very, very efficient and very capable solution. That's the main difference: our products are very much focused on solving this problem that we think that machine or computer vision-type applications, or AI at the edge, have. AI at the edge is growing into a very large market and one that will demand specialized and very targeted silicon solutions to satisfy the customer's needs for lower cost, lower power solutions.

Q: How does the InferX platform differ from GPU solutions?

A: There are a couple of differences. One is that GPUs are very well optimized around the GPU rendering pipeline, so they actually have a lot of extra hardware on the chip that is designed specifically for ray tracing and pixel processing. There's a lot of work there and there's actually a lot of bandwidth required.

Most GPU systems have tremendous DRAM bandwidth requirements. In fact, there's a whole other category of memory technology that was developed specifically to meet the bandwidth requirements of GPUs: GDDR (Graphics Double Data Rate), which is designed to support graphics bandwidth requirements.

With our device, by contrast, the bandwidth requirements for machine learning are not as high as those for graphics. We can actually design a system with low-power DDR. There are various DDR flavors. The low-power DDR, for example, is the kind of memory that's in your cell phone; it's much more of a commodity with much lower costs.

We can use that and the on-chip memory within our own device, and we can build a much smaller, lighter weight system that still achieves a very high level of performance and throughput. The die itself, for a comparable level of performance and depending on what we're measuring against, is a seventh to a tenth of the silicon die area of a GPU.

This means that there are significant cost savings as you're building up a silicon wafer, as you get up to 10x as many InferX devices out of a single silicon investment, which obviously will translate into lower prices. The smaller silicon area will also translate to much lower power, so there are a lot of reasons why we're very different compared to a GPU.
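Editor's note: the wafer arithmetic above can be sketched in a few lines of Python. This is only a rough illustration, not Flex Logix's figures: the 300 mm wafer diameter and the 500 mm² GPU die area are hypothetical numbers, and the `dies_per_wafer` helper is an assumption that ignores edge loss, scribe lines, and yield.

```python
import math


def dies_per_wafer(wafer_diameter_mm: float, die_area_mm2: float) -> int:
    """Upper bound on dies per wafer: wafer area divided by die area.

    Ignores edge loss, scribe lines, and yield, so real counts are lower.
    """
    wafer_area_mm2 = math.pi * (wafer_diameter_mm / 2) ** 2
    return int(wafer_area_mm2 // die_area_mm2)


# Hypothetical numbers, for illustration only.
WAFER_DIAMETER_MM = 300.0
GPU_DIE_AREA_MM2 = 500.0

gpu_dies = dies_per_wafer(WAFER_DIAMETER_MM, GPU_DIE_AREA_MM2)

# "A seventh to a tenth" of the GPU die area, as described in the interview.
for fraction in (7, 10):
    small_dies = dies_per_wafer(WAFER_DIAMETER_MM, GPU_DIE_AREA_MM2 / fraction)
    ratio = small_dies / gpu_dies
    print(f"1/{fraction} of the area: {small_dies} dies vs {gpu_dies} ({ratio:.1f}x)")
```

Under these simplified assumptions, the number of dies per wafer scales roughly inversely with die area, which is where the "up to 10x" comes from.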

GPUs are also traditionally delivered on very large PCI cards. In fact, they keep getting bigger and wider because of the need to address thermal issues by using more fans and bigger heatsinks. GPUs have big fans that have to take the heat away because when we do math in silicon chips, we generate heat, which is kind of a byproduct of processing.

If you don't get the heat away, then ultimately the chip stops working. There's a lot of space and area that's also required. From the perspective of what we're doing, our devices are smaller, the boards that we put them on are much smaller, and the power requirements are much lower than what you'll see with GPUs. We’re also really focused on solving an inferencing problem, as opposed to a graphics problem.

Q: How important is software with inference solutions and how is Flex Logix addressing that?

A: The concepts of hardware and software still exist in the inferencing and machine learning world. Software is much more important in the model training and development phases. What ends up happening, for example, is that you have a model for object detection. Object detection is when you present an image to a computer system, and it's able to pick out faces, cars, trees, and more. It's able to recognize them based on what it learned before and draws boxes around them or generates a list of what it perceives with associated probabilities. The results are then passed to more traditional software programs for further processing.
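Editor's note: as a minimal sketch of that hand-off, the snippet below shows what a list of detections with associated probabilities might look like when it reaches ordinary application code. The `Detection` class, the `keep_confident` helper, the 0.5 threshold, and the sample values are all hypothetical and are not part of any Flex Logix API.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Detection:
    label: str                       # what the model thinks it sees
    confidence: float                # probability in [0, 1]
    box: Tuple[int, int, int, int]   # (x_min, y_min, x_max, y_max) in pixels


def keep_confident(detections: List[Detection], threshold: float = 0.5) -> List[Detection]:
    """Keep only detections whose confidence meets the threshold."""
    return [d for d in detections if d.confidence >= threshold]


# Made-up output for a single frame, for illustration only.
detections = [
    Detection("car", 0.91, (34, 120, 310, 260)),
    Detection("face", 0.78, (400, 60, 460, 130)),
    Detection("tree", 0.32, (500, 10, 620, 300)),
]

# The "more traditional software" step: plain filtering and reporting.
for d in keep_confident(detections):
    print(f"{d.label}: {d.confidence:.0%} at {d.box}")
```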

A really important family of object detection models is YOLO. The most recent version of that is called YOLOv5 and it's a really powerful system for doing object detection. We're able to run these various YOLOv5 models at full speed, with real-time processing. These powerful models, when properly accelerated, can support 30 frames per second of processing and identify things in real-time. YOLOv5 is implemented in PyTorch, a machine learning library for the Python programming language that is based on Torch, and there's a whole set of development tools and environments that are used to both test and develop that model, and to train that model on a large-scale computing infrastructure.

As you can see, there is a lot of software development in that phase of the model. Then you get to the point where you say, “Okay, now that I've got my model trained and it works, I've taught it all the things it needs to know.” Although it's represented as software, it can be reduced to what is called an execution graph. It's essentially a graph of operations that have lots of weights and biases associated with them: a bunch of numbers and a bunch of operations.
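Editor's note: to make the idea of an execution graph concrete, here is a toy sketch in plain Python. It is not the InferX format or any real model: the two-node `GRAPH`, the `linear` and `relu` operators, and the weight and bias values are all made up for illustration.

```python
def linear(x, weights, bias):
    """Fully connected layer: one weighted sum plus bias per output."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(x):
    """Element-wise rectified linear unit."""
    return [max(0.0, v) for v in x]


OPS = {"linear": linear, "relu": relu}

# The "graph": operations listed in execution order, each with its inputs,
# its output name, and any trained numbers (weights and biases) it carries.
GRAPH = [
    {"op": "linear", "inputs": ["x"], "output": "h",
     "params": {"weights": [[1.0, -1.0], [0.5, 0.5]], "bias": [0.0, -1.0]}},
    {"op": "relu", "inputs": ["h"], "output": "y"},
]


def run_graph(nodes, inputs):
    """Execute each node in order, storing intermediate results by name."""
    values = dict(inputs)
    for node in nodes:
        args = [values[name] for name in node["inputs"]]
        values[node["output"]] = OPS[node["op"]](*args, **node.get("params", {}))
    return values


result = run_graph(GRAPH, {"x": [2.0, 3.0]})
print(result["y"])  # prints [0.0, 1.5]
```

Nothing in `GRAPH` depends on the framework that trained the model, which is what lets a description like this be moved from one piece of hardware to another.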

There are techniques that the industry has developed to represent the computation of that machine learning model in a descriptive format. The one that the industry uses, by and large, and the most well accepted, is something called ONNX, which stands for Open Neural Network Exchange. It's a description of the model and it describes all the operations and processing associated with that.

There are many different toolchains for developing models. We talked about PyTorch, but there’s also TensorFlow and Caffe. There are these various frameworks that have existed, but virtually all of them have the ability to export what we call a trained ONNX model. That's a mathematical representation of all of the math and all the dependencies that that model represents.

We can take that ONNX representation and convert that into the executable program that runs on our device. There's a lot of software and a lot of processing required to convert ONNX to our executable format, and we have to develop that. However, from a customer perspective, once the model is trained, the process of converting that trained model to what runs on our device is really fairly simple.

We don't ask customers to develop models specifically for InferX. We essentially say: “If you have a trained model, we can take that trained model and port that to our InferX accelerator.” Now, the models have a set of operators and there are certain assumptions about the model that we have to support within our hardware. We're also continuing to expand the support for that every day as we continue to work with customers and develop our technology.

However, we're really not asking customers to program our InferX device. We're saying that if they have a trained model, we can take that trained model, which is abstracted from the devices that it runs on, and we can port that onto our device. That is a different concept than saying “Hey, you program for our device.” It really is taking this model and creating a very simple path for taking the model and being able to run it at scale. This means you do it once and then, if you deploy 100,000 units, you just take that and replicate it across those devices. It’s very simple to do that.

Q: How easy is it to port models to the InferX platform?

A: There are a couple of things that we should talk about on that front. One is that generally, when models are trained, they use a 32-bit floating-point number format, and when we do inferencing, we almost always will convert that into an 8-bit integer. It helps to support the smaller die size and lower power, and what we find is that we have a very small loss of accuracy when we do that.

There are sets of tools that we provide to help the customer understand how much accuracy is being lost in the conversion to 8-bit integer data. Generally, it's less than 1%. If your accuracy was 92%, then it would reduce that to maybe 91%. However, you get real value out of the conversion in terms of being able to reduce the size and power of the solution, which you have to pay attention to.
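Editor's note: the conversion from 32-bit floating point to 8-bit integers can be sketched as follows. This uses symmetric per-tensor quantization, one common scheme chosen here as an assumption; the interview does not say which scheme the Flex Logix tools use. The function names and sample weights are hypothetical, and the error measured is the rounding error on individual values, not the model-level accuracy loss Sam describes.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using a single shared scale."""
    # Fall back to a scale of 1.0 if every value is zero.
    scale = (max(abs(v) for v in values) / 127.0) or 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the 8-bit integers."""
    return [q * scale for q in quantized]


# Made-up weights, for illustration only.
weights = [0.82, -1.27, 0.05, 0.40, -0.63]

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("int8 values:", q)
print("scale:", scale)
print("largest rounding error:", max_error)
```

Each value is stored in one byte instead of four, and the rounding error per value is bounded by half the scale. How that translates into accuracy on a real model has to be measured on validation data, which is the job of the tools mentioned above.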

Then we work with customers to make sure they're satisfied that the results meet their expectations. Generally, customers will come in and they'll have certain expectations in terms of this throughput and the accuracy that they expect for that model.

Q: Are there boards available to help customers get to market quickly and easily?

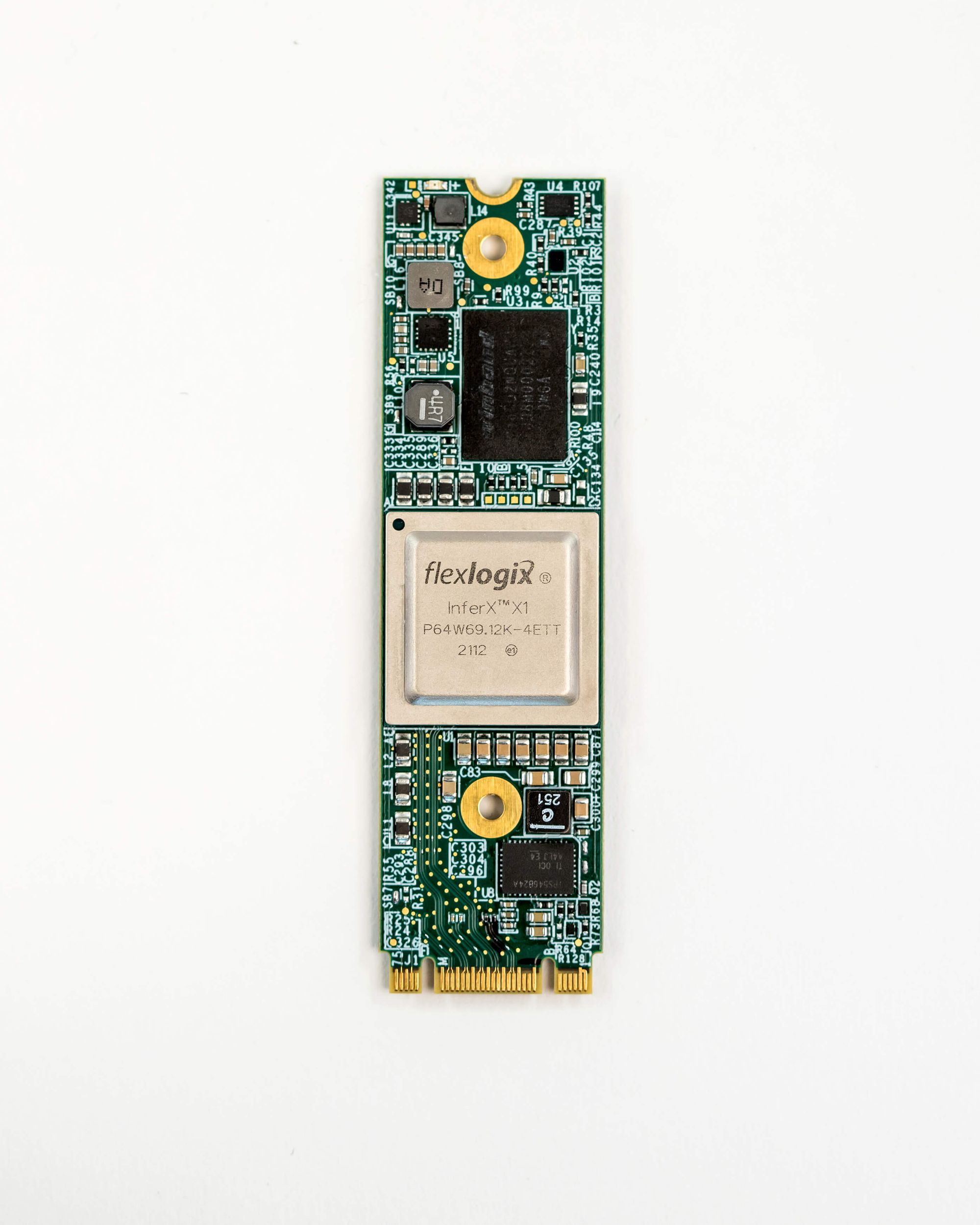

A: We currently have both a PCI board and what's called an M.2, which is a small form factor board the size of a stick of gum. Both are available and can be put into standard computer systems. Most computers, other than a notebook, will have a slot for a PCI card. The M.2 boards will fit within smaller form factor boxes, like a NUC or an industrial machine.

Both of those are available now and we're happy to take inquiries from customers looking to test and run their workloads, and start the discussion with us about how we can help solve their problems and compare the InferX technology to what they're using now.

A lot of times, people won't actually use a GPU; they'll use a CPU-based system instead. In those cases, especially for vision models, we can bring a significant speed-up versus what they're doing now. Versus GPU systems, we’re really about bringing a much more efficient, smaller solution to bear that's easy to work with. That's the approach we want to take.

Q: What is your vision for InferX in the next 5 years?

A: We're continuing to develop technology, but I don't have anything to announce right now. We’re investing in future product lines and want to help customers solve their problems. We see the performance of this technology continuing to grow, and we're working hard on that.

We see much broader adoption of machine learning at the edge and computer-based systems that rely much more on vision, something that hasn't been possible in the past. That's something that we can solve now. It really is what will bring a lot of positive changes to our world, and this will happen as this technology continues to mature and develop.

We see ourselves as a very large player in the market for AI, based on the strength of our technology. There is a really important demand in the market for vision systems that will make our lives better. We see lots of opportunities to improve the welfare and standard of living of mankind by helping our computing systems see and interact better with the environment. I think that's a noble endeavor and we want to do our part in making something that can be broadly used.

American technology company Flex Logix provides industry-leading solutions that enable flexible chips and accelerate neural network inference. It develops and licenses programmable semiconductor IP cores and offers a line of inference accelerators targeted at edge vision applications. Flex Logix’s technology offers cheaper, faster, and smaller (or the most efficient) acceleration technology that also uses less power when compared with current GPU-based solutions.

Want to hear more from Sam? Get your ticket for AIAI’s Computer Vision Summit on April 27, 2022, in San Jose, California, and attend his panel live.

Follow us on LinkedIn